Large-Scale Supervised Learning For

3D Point Cloud Labeling: Semantic3d.Net

Timo Hackel, Jan D. Wegner, Nikolay Savinov, Lubor Ladicky, Konrad Schindler, and Marc Pollefeys

Abstract

In this paper we review current state-of-the-art in 3D point

cloud classification, present a new 3D point cloud classifica-

tion benchmark data set of single scans with over four billion

manually labeled points, and discuss first available results

on the benchmark. Much of the stunning recent progress in

2D image interpretation can be attributed to the availability

of large amounts of training data, which have enabled the

(supervised) learning of deep neural networks. With the data

set presented in this paper, we aim to boost the performance

of

CNNs

also for 3D point cloud labeling. Our hope is that this

will lead to a breakthrough of deep learning also for 3D (geo-)

data. The semantic3D.net data set consists of dense point

clouds acquired with static terrestrial laser scanners. It con-

tains eight semantic classes and covers a wide range of urban

outdoor scenes, including churches, streets, railroad tracks,

squares, villages, soccer fields, and castles. We describe our

labeling interface and show that, compared to those already

available to the research community, our data set provides

denser and more complete point clouds, with a much higher

overall number of labeled points. We further provide descrip-

tions of baseline methods and of the first independent sub-

missions, which are indeed based on

CNNs

, and already show

remarkable improvements over prior art. We hope that seman-

tic3D.net will pave the way for deep learning in 3D point

cloud analysis, and for 3D representation learning in general.

Introduction

Neural networks have made a spectacular comeback in im-

age analysis since the seminal paper of (Krizhevsky

et al.,

2012), which revives earlier work of Fukushima (1980) and

LeCun

et al.,

(1989). Especially deep convolutional neural

networks (

CNNs

) have quickly become the core technique for a

whole range of learning-based image analysis tasks. The large

majority of state-of-the-art methods in computer vision and

machine learning now include

CNNs

as one of their essential

components. Their success for image-interpretation tasks is

mainly due to (i) easily parallelisable network architectures

that facilitate training from millions of images on a single

GPU, and (ii) the availability of huge public benchmark data

sets like ImageNet (Deng

et al.,

2009; Russakovsky

et al.,

2015) and Pascal

VOC

(Everingham

et al.,

2010) for RGB im-

ages, or SUN

RGB-D

(Song

et al.,

2015) for

RGB-D

data.

While

CNNs

have been a great success story for image inter-

pretation, they have not yet made a comparable impact for 3D

point cloud interpretation. What makes supervised learning

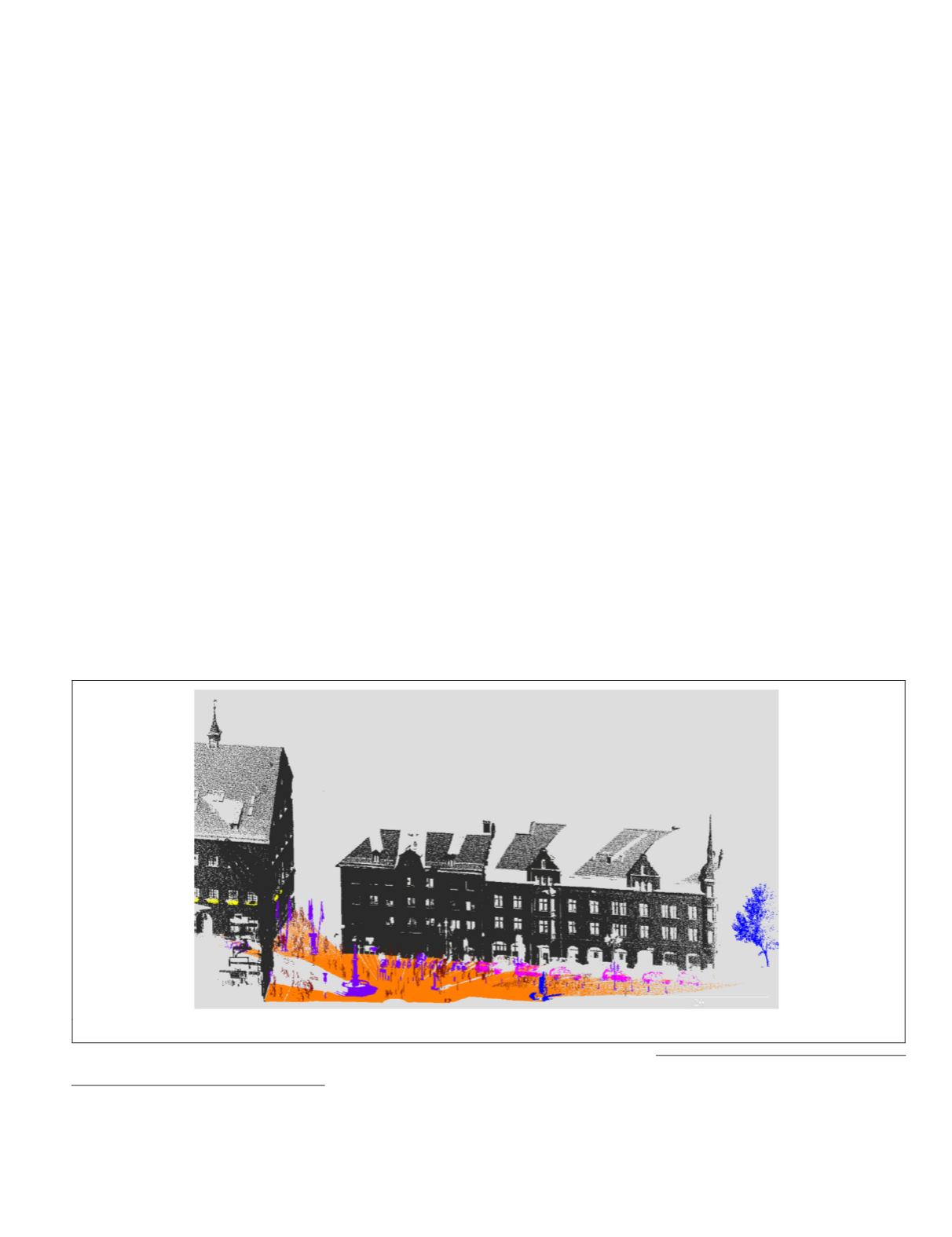

hard for 3D point clouds is the sheer size of millions of points

per data set, and the irregular, not grid-aligned, and in places

very sparse distribution of the data, with strongly varying

point density (Figure 1).

While recording point clouds is nowadays straight-

forward, the main bottleneck is to generate enough manu-

ally labeled training data, needed for contemporary (deep)

Timo Hackel, Jan D. Wegner, and Konrad Schindler are with

IGP, ETH Zurich, Switzerland (

).

Nikolay Savinov, Lubor Ladicky, and Marc Pollefeys are with

CVG, ETH Zurich, Switzerland.

Photogrammetric Engineering & Remote Sensing

Vol. 84, No. 5, May 2018, pp. 297–308.

0099-1112/18/297–308

© 2018 American Society for Photogrammetry

and Remote Sensing

doi: 10.14358/PERS.84.5.297

Figure 1. Example point cloud from the benchmark dataset, where colors indicate class labels.

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

May 2018

297