the remaining points are considered as test samples. Each experiment was repeated ten times, each time with a different randomly selected training set, and the averages of the obtained results are reported.
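The protocol above (a random training set, the remaining samples used as the test set, ten repetitions, averaged scores) can be sketched in Python as follows. This is a minimal sketch, not the authors' code: it assumes the training samples are drawn separately for each class, and `run_once` is a hypothetical callback that trains a classifier on the given indices and returns one score, such as the average accuracy.

```python
import random
from statistics import mean


def split_per_class(labels, n_train, rng):
    """Randomly pick n_train training indices per class; all remaining indices are test samples.

    Assumption: training samples are drawn separately for each class.
    """
    by_class = {}
    for idx, lab in enumerate(labels):
        by_class.setdefault(lab, []).append(idx)
    train, test = [], []
    for lab in sorted(by_class):
        idxs = by_class[lab][:]  # copy so the shuffle does not alter by_class
        rng.shuffle(idxs)
        train.extend(idxs[:n_train])
        test.extend(idxs[n_train:])
    return sorted(train), sorted(test)


def repeated_experiment(labels, n_train, run_once, n_runs=10, seed=0):
    """Repeat the experiment n_runs times with different random training sets; return the mean score.

    run_once(train_idx, test_idx) is a hypothetical callback that trains and evaluates
    a classifier and returns a single score (e.g. average accuracy).
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(n_runs):
        train, test = split_per_class(labels, n_train, rng)
        scores.append(run_once(train, test))
    return mean(scores)
```

Seeding a single `random.Random` instance once and reusing it across runs gives ten different training sets while keeping the whole experiment reproducible.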

Two different classifiers were used to classify the data: maximum likelihood (ML) and support vector machine (SVM). ML is a parametric classifier that is sensitive to the number of training samples, while the SVM classifier is less sensitive to the training sample size. A third-degree polynomial kernel is used for SVM classification, implemented with the Library for Support Vector Machines (LIBSVM) tool (Chang and Lin, 2008). The default values defined in LIBSVM were used as the parameters of the polynomial kernel. The one-against-one multiclass classification algorithm was used in our experiments.
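For reference, LIBSVM defines the polynomial kernel as `(gamma * <x, z> + coef0) ** degree`, with defaults `degree = 3`, `gamma = 1 / number of features`, and `coef0 = 0`; its one-against-one scheme trains k(k-1)/2 pairwise classifiers (45 for the ten Indian classes) and predicts by majority vote. The plain-Python sketch below illustrates both pieces. It does not train an SVM: `binary_decision` is a hypothetical stand-in for a trained pairwise classifier, and the function names are illustrative.

```python
from itertools import combinations


def libsvm_poly_kernel(x, z, degree=3, gamma=None, coef0=0.0):
    """Polynomial kernel (gamma * <x, z> + coef0) ** degree using LIBSVM's default parameters."""
    if gamma is None:
        gamma = 1.0 / len(x)  # LIBSVM default: 1 / number of features
    dot = sum(a * b for a, b in zip(x, z))
    return (gamma * dot + coef0) ** degree


def one_against_one_predict(x, classes, binary_decision):
    """Majority vote over all k(k-1)/2 pairwise classifiers.

    binary_decision(i, j, x) is a hypothetical callback that returns the winning class
    (i or j) of the pairwise classifier trained on classes i and j.
    In this sketch, ties go to the class listed first in `classes`.
    """
    votes = {c: 0 for c in classes}
    for i, j in combinations(classes, 2):
        votes[binary_decision(i, j, x)] += 1
    # max() returns the first class with the highest vote count
    return max(classes, key=lambda c: votes[c])
```

In scikit-learn, whose `SVC` is built on LIBSVM, these kernel defaults correspond to `SVC(kernel="poly", degree=3, gamma="auto", coef0=0.0)`; scikit-learn's own default `gamma="scale"` differs from LIBSVM's 1/num_features.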

We used several measures to evaluate the feature extraction methods: average accuracy (the mean of the class-specific accuracies), average reliability (the mean of the class-specific reliabilities, where the reliability of a class is the number of test samples correctly classified into that class divided by the total number of samples assigned to that class), overall accuracy (the percentage of correctly classified samples among all test samples), and the kappa coefficient (Congalton et al., 1983). We also use McNemar's test to assess the statistical significance of differences between classification results (Foody, 2004). The difference in accuracy between two classifiers is statistically significant at the 5% level if $|Z_{12}| > 1.96$. The sign of $Z_{12}$ indicates whether classifier 1 is more accurate than classifier 2 ($Z_{12} > 0$) or vice versa ($Z_{12} < 0$).
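The evaluation measures and McNemar's test described above can be sketched from a confusion matrix and per-sample predictions. This is a minimal sketch under stated assumptions: rows of `cm` are true classes and columns are predicted classes, and $Z_{12}$ is computed in the usual form given by Foody (2004), $Z_{12} = (f_{12} - f_{21})/\sqrt{f_{12} + f_{21}}$, where $f_{12}$ counts test samples correctly classified by classifier 1 but misclassified by classifier 2, and $f_{21}$ the reverse. The function names are illustrative.

```python
import math


def accuracy_measures(cm):
    """Return (average accuracy, average reliability, overall accuracy, kappa).

    Assumption: cm[i][j] = number of test samples of true class i classified as class j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_tot = [sum(cm[i]) for i in range(k)]                      # samples per true class
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # samples assigned to each class
    diag = [cm[i][i] for i in range(k)]
    acc = [diag[i] / row_tot[i] for i in range(k)]  # class-specific accuracy
    # reliability: correct / assigned to that class (0 if nothing was assigned to it)
    rel = [diag[j] / col_tot[j] if col_tot[j] else 0.0 for j in range(k)]
    oa = sum(diag) / n
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2  # chance agreement
    kappa = (oa - pe) / (1 - pe)
    return sum(acc) / k, sum(rel) / k, oa, kappa


def mcnemar_z(truth, pred1, pred2):
    """Z12 = (f12 - f21) / sqrt(f12 + f21); positive values favour classifier 1."""
    f12 = sum(1 for t, a, b in zip(truth, pred1, pred2) if a == t and b != t)
    f21 = sum(1 for t, a, b in zip(truth, pred1, pred2) if a != t and b == t)
    if f12 + f21 == 0:
        return 0.0  # the two classifiers never disagree on correctness
    return (f12 - f21) / math.sqrt(f12 + f21)
```

Only the samples on which the two classifiers disagree in correctness enter $Z_{12}$, so both classifiers must be evaluated on the same test samples.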

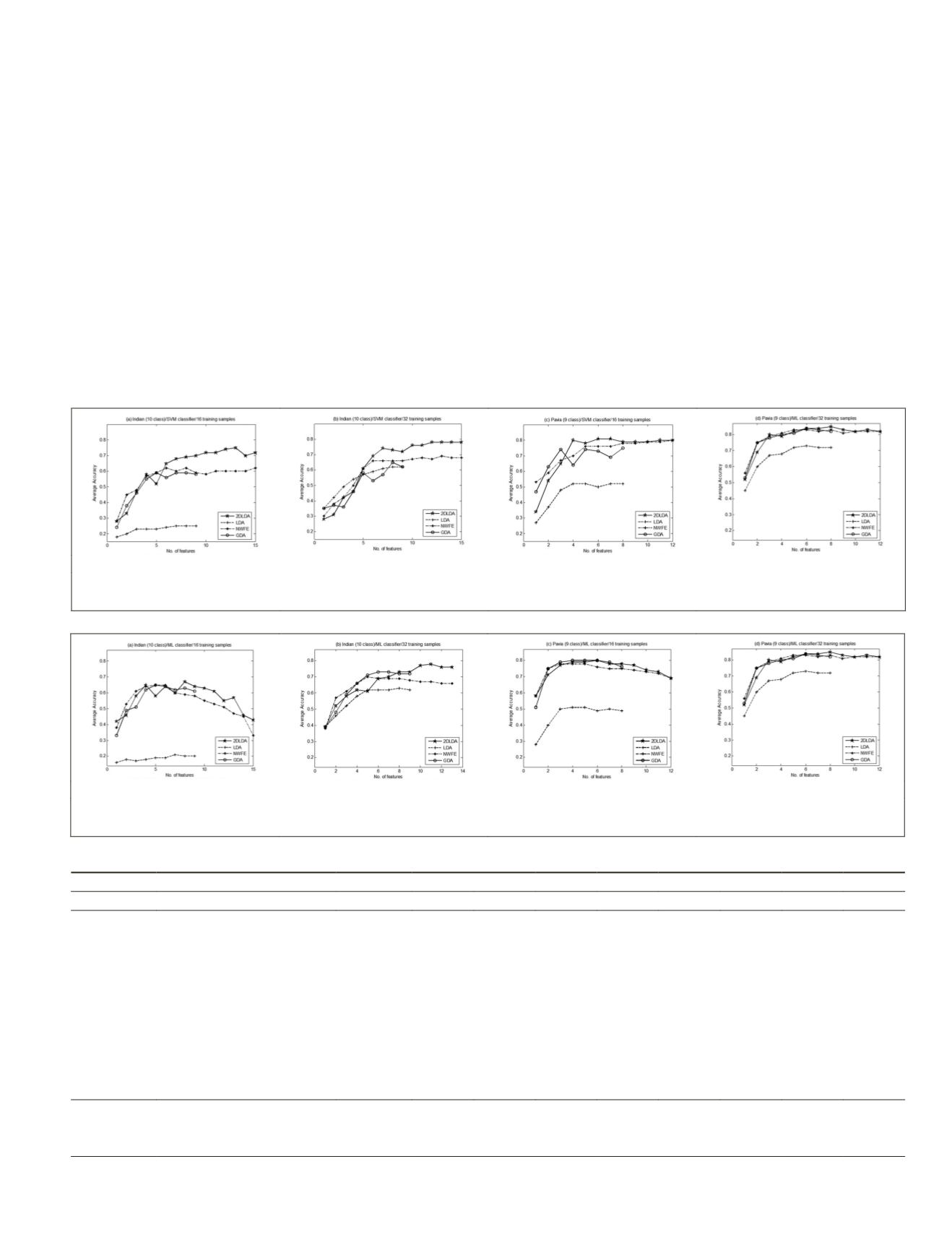

Figure 2 shows the average accuracy versus the number of extracted features for the Indian and Pavia datasets, obtained with the 2DLDA, LDA, GDA, and NWFE methods and the SVM classifier using 16 and 32 training samples.

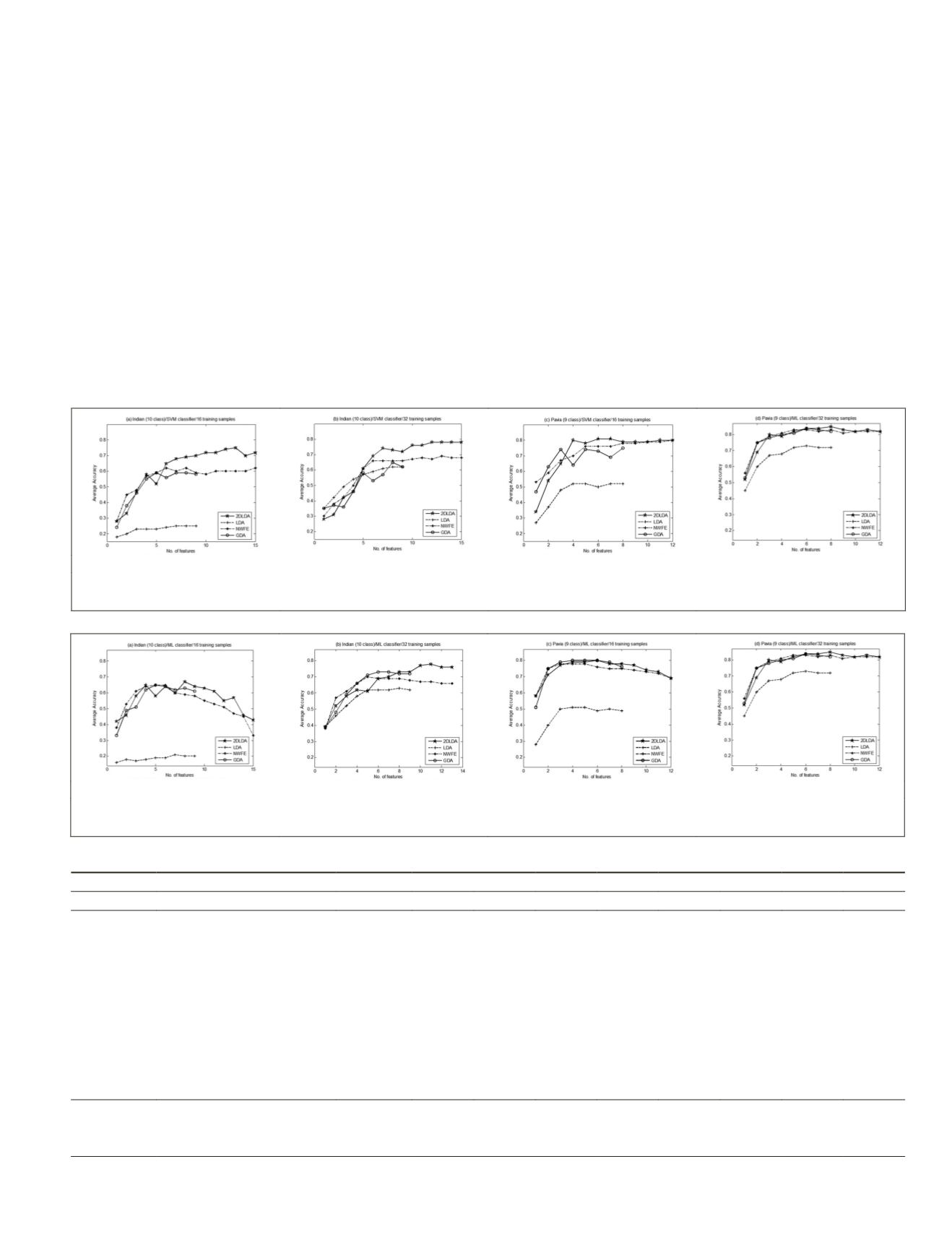

The results obtained by the ML classifier are shown in Figure 3. Table 2 lists the classification accuracy (Acc.) and reliability (Rel.) of all classes, together with the average accuracy, average reliability, overall accuracy, and kappa coefficient, obtained by the SVM classifier with 16 training samples and 9 extracted features for the Indian dataset. A view of the Indian image and the classification maps obtained by the SVM classifier with 16 training samples and 9 features are shown in Figure 4. The results of classification for the Indian dataset with the ML classifier,

Figure 2. The average accuracy obtained by the SVM classifier: (a) the Indian dataset: 16 training samples, (b) the Indian dataset: 32 training samples, (c) the Pavia dataset: 16 training samples, and (d) the Pavia dataset: 32 training samples.

Figure 3. The average accuracy obtained by the ML classifier: (a) the Indian dataset: 16 training samples, (b) the Indian dataset: 32 training samples, (c) the Pavia dataset: 16 training samples, and (d) the Pavia dataset: 32 training samples.

Table 2. The Results of Classification Accuracy Obtained by the SVM Classifier, 16 Training Samples, and Nine Features for the Indian Dataset

| Class no. | Name of class | # samples | 2DLDA Acc. | 2DLDA Rel. | LDA Acc. | LDA Rel. | NWFE Acc. | NWFE Rel. | GDA Acc. | GDA Rel. |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Corn-no till | 1434 | 0.60 | 0.53 | 0.19 | 0.19 | 0.56 | 0.56 | 0.51 | 0.43 |
| 2 | Corn-min till | 834 | 0.65 | 0.40 | 0.21 | 0.15 | 0.50 | 0.39 | 0.65 | 0.32 |
| 3 | Grass/pasture | 497 | 0.92 | 0.63 | 0.14 | 0.13 | 0.61 | 0.51 | 0.53 | 0.46 |
| 4 | Grass/trees | 747 | 0.89 | 0.80 | 0.26 | 0.24 | 0.72 | 0.75 | 0.76 | 0.80 |
| 5 | Hay-windrowed | 489 | 0.98 | 0.96 | 0.49 | 0.61 | 0.98 | 1.00 | 0.99 | 0.97 |
| 6 | Soybeans-no till | 968 | 0.62 | 0.60 | 0.24 | 0.16 | 0.40 | 0.37 | 0.47 | 0.54 |
| 7 | Soybeans-min till | 2468 | 0.42 | 0.72 | 0.18 | 0.26 | 0.43 | 0.61 | 0.30 | 0.60 |
| 8 | Soybeans-clean till | 614 | 0.69 | 0.48 | 0.34 | 0.19 | 0.49 | 0.35 | 0.50 | 0.42 |
| 9 | Woods | 1294 | 0.67 | 0.97 | 0.17 | 0.45 | 0.53 | 0.81 | 0.57 | 0.82 |
| 10 | Bldg-Grass-Tree-Drives | 380 | 0.61 | 0.46 | 0.29 | 0.17 | 0.67 | 0.28 | 0.57 | 0.25 |
| | Average Acc. and Average Rel. | | 0.70 | 0.66 | 0.25 | 0.26 | 0.59 | 0.56 | 0.58 | 0.56 |
| | Overall Acc. | | 0.83 | | 0.64 | | 0.79 | | 0.78 | |
| | Kappa coefficient | | 0.58 | | 0.12 | | 0.48 | | 0.46 | |

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

October 2015

781