Automatic Co-Registration of Pan-Tilt-Zoom (PTZ) Video Images with 3D Wireframe Models

Ravi Ancil Persad, Costas Armenakis, and Gunho Sohn

Abstract

We propose an algorithm for the automatic co-registration of Pan-Tilt-Zoom (PTZ) camera video images with 3D wireframe models. The proposed method automatically retrieves the camera focal length and angular parameters, which change with the motion of PTZ cameras, by matching linear features between PTZ video images and 3D CAD wireframe models. The developed feature-matching schema is based on a novel evidence-based hypothesis-verification optimization framework referred to as Line-based Randomized RANdom SAmple Consensus (LR-RANSAC). LR-RANSAC introduces a fast and stable pre-verification test into the optimization process to avoid unnecessary verification of erroneous hypotheses. An evidence-based verification follows to optimally select the PTZ camera parameters: an original line-based approach for full verification, which exploits local geometrical cues in the image scene, evaluates the pre-verified hypotheses. Tests on an indoor dataset produced a 0.06 mm error in focal length estimation and rotational errors on the order of 0.18° to 0.24°. Experiments on the outdoor dataset resulted in a 0.07 mm error for focal length and rotational errors ranging from 0.19° to 0.30°.

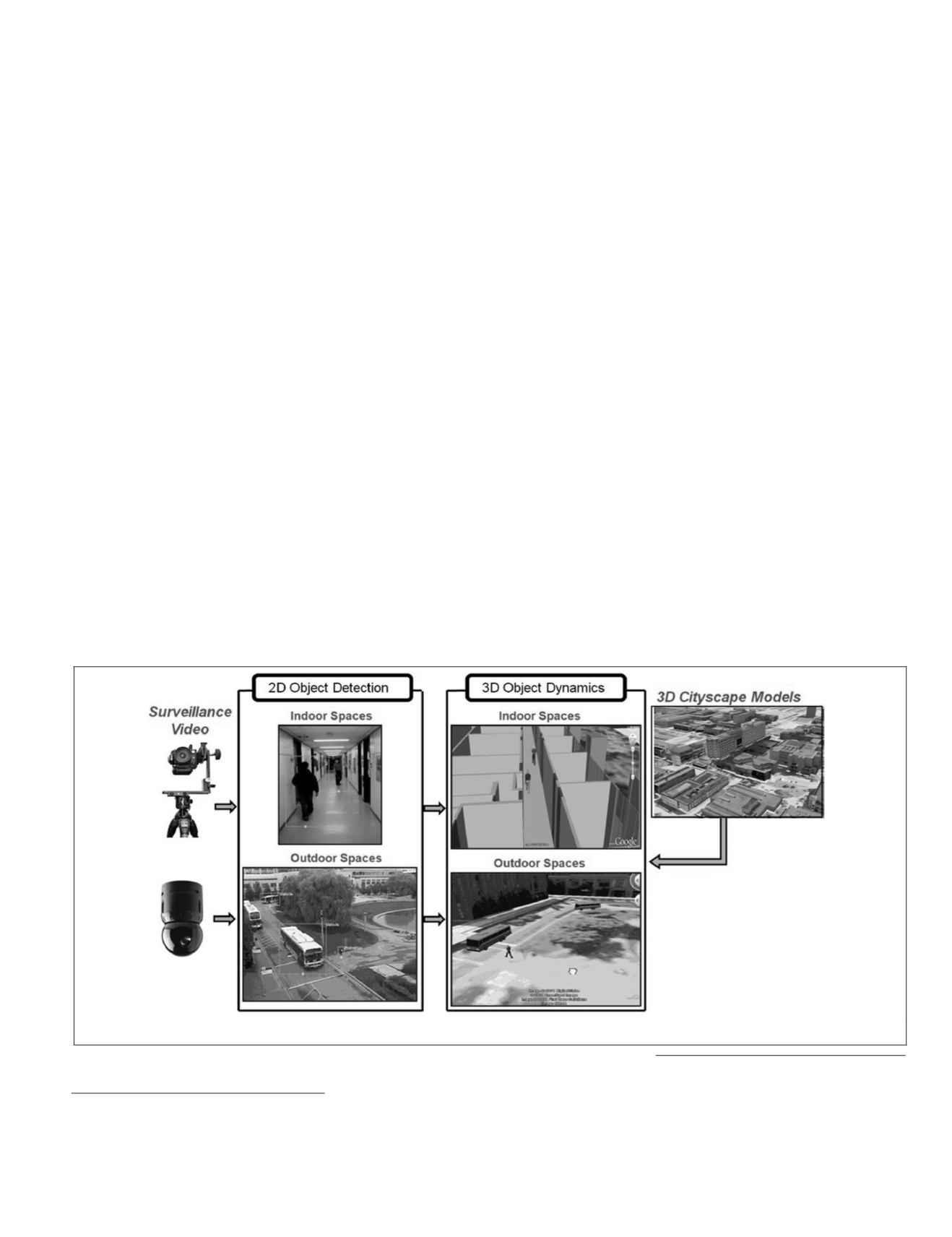

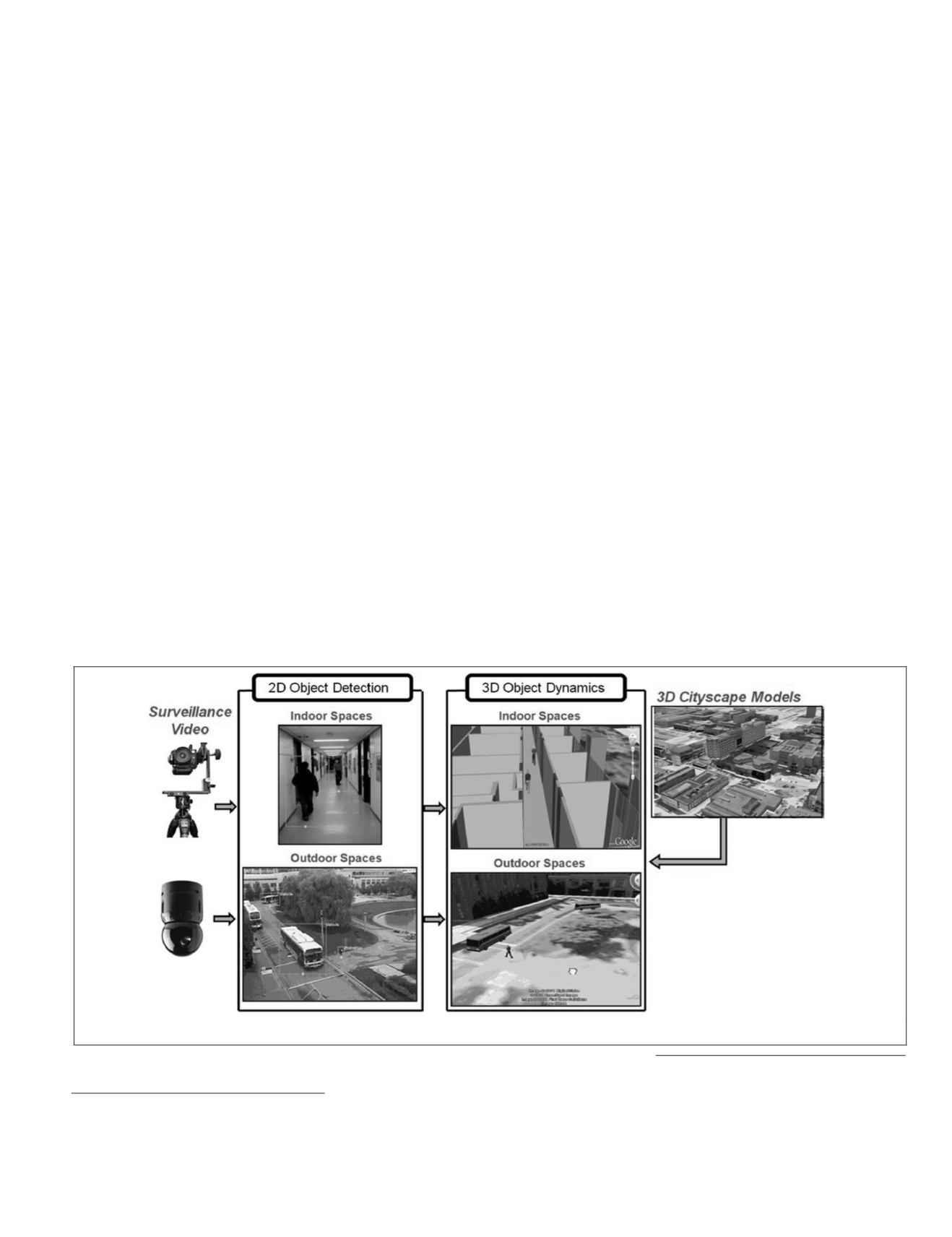

Introduction

Indoor and outdoor video images from surveillance monitoring systems are used in computer vision tasks such as event detection and tracking of moving objects (e.g., pedestrians and vehicles) (Adam et al., 2008; Leibe et al., 2008). The projection of 2D object positions, detected from monocular single images, into 3D space is possible by determining the orientation parameters of the camera with respect to the orthogonal axes of the 3D wireframe model. However, automatic estimation of these parameters is critical, particularly for pan-tilt-zoom (PTZ) surveillance cameras, where the angular camera parameters and the focal length change spontaneously with the camera motion while the position of the camera remains fixed. Our research work is motivated by the problem of image-based automatic localization of dynamic objects for augmented reality applications using surveillance cameras (Figure 1). Using virtual 3D environments populated with building models of 3D cityscapes and road networks, avatars of pedestrians and vehicles detected from 2D video can be rendered in the 3D environments. In related works (Baklouti et al., 2009; Kim et al., 2009), the transformation parameters to move from 2D to 3D object positions are manually established. We introduce a new approach for automatically estimating the parameters of PTZ surveillance cameras relative to the virtual 3D scene models. This would enable the transformation of image positions of moving objects into the virtual 3D environment during times of camera motion. Using the wireframes of 3D scene models, we match their 3D lines with corresponding 2D image lines.
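To make the role of the unknown parameters concrete, the following is a minimal sketch of how a 3D wireframe line could be projected into a PTZ image once the pan angle, tilt angle, and focal length are known. It is an illustration and not the authors' implementation. The function names, the axis convention (camera x right, y down, z forward), the pan-then-tilt rotation order, a principal point at the image origin, and the absence of lens distortion are all assumptions made here for illustration.

```python
import math


def world_to_camera(point, center, pan, tilt):
    """Express a world point in the camera frame (x right, y down, z forward).

    Assumption: at pan = tilt = 0 the camera axes coincide with the world axes.
    Angles are in radians; the camera position `center` stays fixed.
    """
    dx = point[0] - center[0]
    dy = point[1] - center[1]
    dz = point[2] - center[2]
    # Pan: rotation about the vertical (y) axis, positive toward +x.
    cp, sp = math.cos(pan), math.sin(pan)
    x1 = cp * dx - sp * dz
    y1 = dy
    z1 = sp * dx + cp * dz
    # Tilt: rotation about the horizontal (x) axis, positive looking up (-y).
    ct, st = math.cos(tilt), math.sin(tilt)
    return (x1, ct * y1 + st * z1, -st * y1 + ct * z1)


def project_point(point, center, pan, tilt, focal):
    """Pinhole projection; result is in the same units as `focal` (e.g., mm)."""
    xc, yc, zc = world_to_camera(point, center, pan, tilt)
    if zc <= 0.0:
        raise ValueError("point lies behind the camera")
    return (focal * xc / zc, focal * yc / zc)


def project_segment(segment, center, pan, tilt, focal):
    """Project a 3D wireframe line segment by projecting its two endpoints."""
    return tuple(project_point(p, center, pan, tilt, focal) for p in segment)


if __name__ == "__main__":
    camera_center = (0.0, 0.0, 0.0)                 # hypothetical fixed position
    edge = ((-1.0, -0.5, 10.0), (1.0, -0.5, 10.0))  # hypothetical model edge
    print(project_segment(edge, camera_center, math.radians(5.0),
                          math.radians(2.0), 6.0))
```

In a line-matching setting, projected segments of this kind would be compared against 2D lines extracted from the video frame, and the pan, tilt, and focal length values would be adjusted until the two sets agree.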

Geomatics Engineering, GeoICT Lab, Department of Earth and Space Science and Engineering, Lassonde School of Engineering, York University, 4700 Keele Street, Toronto, Ontario, M3J 1P3, Canada.

Photogrammetric Engineering & Remote Sensing

Vol. 81, No. 11, November 2015, pp. 847–859.

0099-1112/15/847–859

© 2015 American Society for Photogrammetry and Remote Sensing

doi: 10.14358/PERS.81.11.847

Figure 1. Concept of video and 3D city models for augmented reality surveillance.
