By optimally fitting the 3D model to the image frames from PTZ video, the camera parameters can be estimated.

The automatic estimation of camera parameters is a challenging problem. This is particularly true for PTZ cameras, where the orientation parameters and focal length change continuously.

PTZ cameras rotate around two axes. One axis, usually the vertical one, allows horizontal panning. The second axis, usually the horizontal one, allows vertical tilting. In the PTZ axes system, the direction of the optical axis is defined by these vertical and horizontal angles. However, the direction of the camera's optical axis with respect to the 3D wireframe coordinate system can be determined by estimating the three Euler angles that define the angular displacement between the two systems.
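The three Euler angles can be turned into a rotation matrix by composing elementary rotations. The minimal sketch below assumes the omega-phi-kappa sequence common in photogrammetry, composed as R = Rz(kappa) Ry(phi) Rx(omega) with active, right-handed rotations. This section does not state the paper's convention, and texts differ in axis order and in whether the matrix or its transpose is used, so the function names and convention here are illustrative assumptions.

```python
import math

# Elementary right-handed (active) rotations about the X, Y and Z axes.
def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation_from_euler(omega, phi, kappa):
    """Assumed omega-phi-kappa sequence: R = Rz(kappa) Ry(phi) Rx(omega).

    Angles are in radians. Other sources use the transpose (passive
    rotations) or a different axis order; check before reuse.
    """
    return matmul(rot_z(kappa), matmul(rot_y(phi), rot_x(omega)))
```

Whatever convention is chosen, the result must be orthonormal with determinant +1, which is a cheap sanity check when the three angles are refined by an estimator.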

Since PTZ cameras are mounted at a fixed position in most surveillance applications, the camera location (translation) is treated as a known, fixed parameter within the 3D model coordinate frame. However, the focal length changes with the PTZ camera's zoom, while the pose angles change with its rotations. Hence, we define the unknown camera parameters to be the three rotational pose angles and the camera focal length. The standard collinearity equations are used as the 3D-to-2D perspective camera model, under two assumptions: (a) the calibrated principal point is at the image center, and (b) lens distortion is neglected.
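Under these two assumptions, the collinearity equations reduce to x = -f (r11 dX + r12 dY + r13 dZ) / (r31 dX + r32 dY + r33 dZ) and y = -f (r21 dX + r22 dY + r23 dZ) / (r31 dX + r32 dY + r33 dZ), where dX, dY, dZ are the object-point coordinates minus the camera position. The sketch below is a hedged illustration, not the paper's code: it assumes R rotates object-space vectors into the camera frame, the camera looks along its -Z axis (the usual photogrammetric convention), f is in pixels, and pixel rows increase downward. The function name is hypothetical.

```python
def project_point(point, cam_center, R, f, width, height):
    """Collinearity projection of a 3D object point to pixel coordinates.

    Sketch assumptions: R maps object-space vectors into the camera frame,
    the camera looks along -Z, the principal point is the image center,
    there is no lens distortion, and f is expressed in pixels.
    """
    d = [point[i] - cam_center[i] for i in range(3)]
    # Rotated coordinate differences: the two numerators and the common
    # denominator of the collinearity equations.
    u = sum(R[0][j] * d[j] for j in range(3))
    v = sum(R[1][j] * d[j] for j in range(3))
    w = sum(R[2][j] * d[j] for j in range(3))
    if w >= 0:
        raise ValueError("point is not in front of the camera")
    x = -f * u / w  # image-plane x, origin at principal point, pointing right
    y = -f * v / w  # image-plane y, origin at principal point, pointing up
    # Shift to the image center and flip y to get (column, row).
    return width / 2.0 + x, height / 2.0 - y
```

Projecting both endpoints of a wireframe edge with the same R and f gives the corresponding 2D segment in the frame.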

Related Works

2D-to-3D feature matching is a coupled problem. The first part is “the correspondence problem,” i.e., automatically determining which feature matches its counterpart in 2D or 3D space. The second part is “the transformation problem,” i.e., estimating the unknown parameters required to map 3D features to their corresponding 2D features, or vice versa.

We use line segments instead of points because of their predominance in “built-up” scenes consisting mainly of building structures. Feature matching using linear primitives is well established in photogrammetric computer vision, albeit mostly from the perspective of 2D image-to-2D image matching (Schmid and Zisserman, 1997; Taylor, 2002). However, there has been some work on matching 3D model lines with 2D image lines (Habib et al., 2003; Jaw and Perny, 2008). Our work contributes to this progression with a newly proposed 3D-to-2D line matching framework.
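To make line-based matching concrete: once a 3D model line has been projected into the image, a candidate pairing with an extracted image line can be scored geometrically. The sketch below uses two simple quantities, the angle between the segments and the mean perpendicular distance of the projected model endpoints to the infinite image line. It is a hypothetical illustration, not the similarity measure used in this paper, and the function name is an assumption.

```python
import math

def line_match_cost(model_seg, image_seg):
    """Return (angle difference in degrees, mean endpoint distance in pixels).

    model_seg: projected model segment ((ax, ay), (bx, by)).
    image_seg: extracted image segment ((px, py), (qx, qy)).
    """
    (ax, ay), (bx, by) = model_seg
    (px, py), (qx, qy) = image_seg
    # Segments are undirected, so fold orientations into [0, pi).
    t_m = math.atan2(by - ay, bx - ax) % math.pi
    t_i = math.atan2(qy - py, qx - px) % math.pi
    d_theta = abs(t_m - t_i)
    d_theta = min(d_theta, math.pi - d_theta)
    length = math.hypot(qx - px, qy - py)
    if length == 0:
        raise ValueError("degenerate image segment")

    def dist(x, y):
        # Perpendicular distance from (x, y) to the line through p and q.
        return abs((qx - px) * (py - y) - (px - x) * (qy - py)) / length

    mean_dist = 0.5 * (dist(ax, ay) + dist(bx, by))
    return math.degrees(d_theta), mean_dist
```

A pair might then be accepted as a tentative correspondence when both values fall below chosen thresholds; such thresholds would be tuning parameters, not values taken from the paper.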

In the early 1980s, numerous feature matching strategies such as RANSAC (Fischler and Bolles, 1981) were developed as model-based object recognition systems came into use. In recent years, with the increasing use of machine learning, appearance-based object recognition (Roth and Winter, 2008) has somewhat superseded model-based object recognition. However, with the advent of new data acquisition technologies such as airborne and terrestrial laser scanning systems, databases of stored 3D geospatial models have become readily available. This facilitates the use of model-based vision techniques in various applications.

Using the classical interpretation tree method (Grimson and Lozano-Perez, 1987), Aider et al. (2005) put forward a model-based line matching solution for the online localization of a mobile indoor robot equipped with a single-view camera. Wang and Neumann (2009) presented an automatic solution for registering aerial images to airborne lidar data in order to generate photo-realistic 3D models. Three extracted line segments were linked together to form a so-called “3CS” (3 connected segments) feature, which was used in the matching process. They proposed a “two-level RANSAC” algorithm that combined local and global RANSAC for the registration. Our paper addresses the automatic co-registration of PTZ video images with 3D CAD models. We apply a model-based vision approach to automatically retrieve the continuously changing focal length and angular parameters of the PTZ image sequence.
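RANSAC-based registration schemes such as the two-level variant above share one basic loop: fit a model to a minimal random sample, count the data consistent with it, and keep the hypothesis with the largest consensus set. The minimal sketch below shows the generic algorithm (Fischler and Bolles, 1981) on the simplest case, fitting a 2D line to points contaminated by outliers. It is not the paper's line-based variant; the function name, iteration count, and distance threshold are illustrative assumptions.

```python
import math
import random

def ransac_line(points, iterations=200, threshold=0.1, seed=0):
    """Fit y = m*x + b to 2D points with RANSAC, then refine on the inliers."""
    rng = random.Random(seed)  # seeded so results are reproducible
    best = []
    for _ in range(iterations):
        # 1. Hypothesize: a minimal sample of two points defines a line.
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # vertical line, not representable as y = m*x + b
        m = (y2 - y1) / (x2 - x1)
        b = y1 - m * x1
        norm = math.hypot(m, 1.0)
        # 2. Verify: consensus set of points within `threshold` of the line.
        inliers = [(x, y) for x, y in points
                   if abs(m * x - y + b) / norm <= threshold]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < 2:
        raise ValueError("no consensus set found")
    # 3. Refine: ordinary least squares on the largest consensus set.
    n = len(best)
    sx = sum(x for x, _ in best)
    sy = sum(y for _, y in best)
    sxx = sum(x * x for x, _ in best)
    sxy = sum(x * y for x, y in best)
    m = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    b = (sy - m * sx) / n
    return m, b, best
```

In a 3D-to-2D registration setting, the "model" would instead be the camera parameters and the minimal sample a few tentative line correspondences, but the hypothesize-verify-refine structure is the same.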

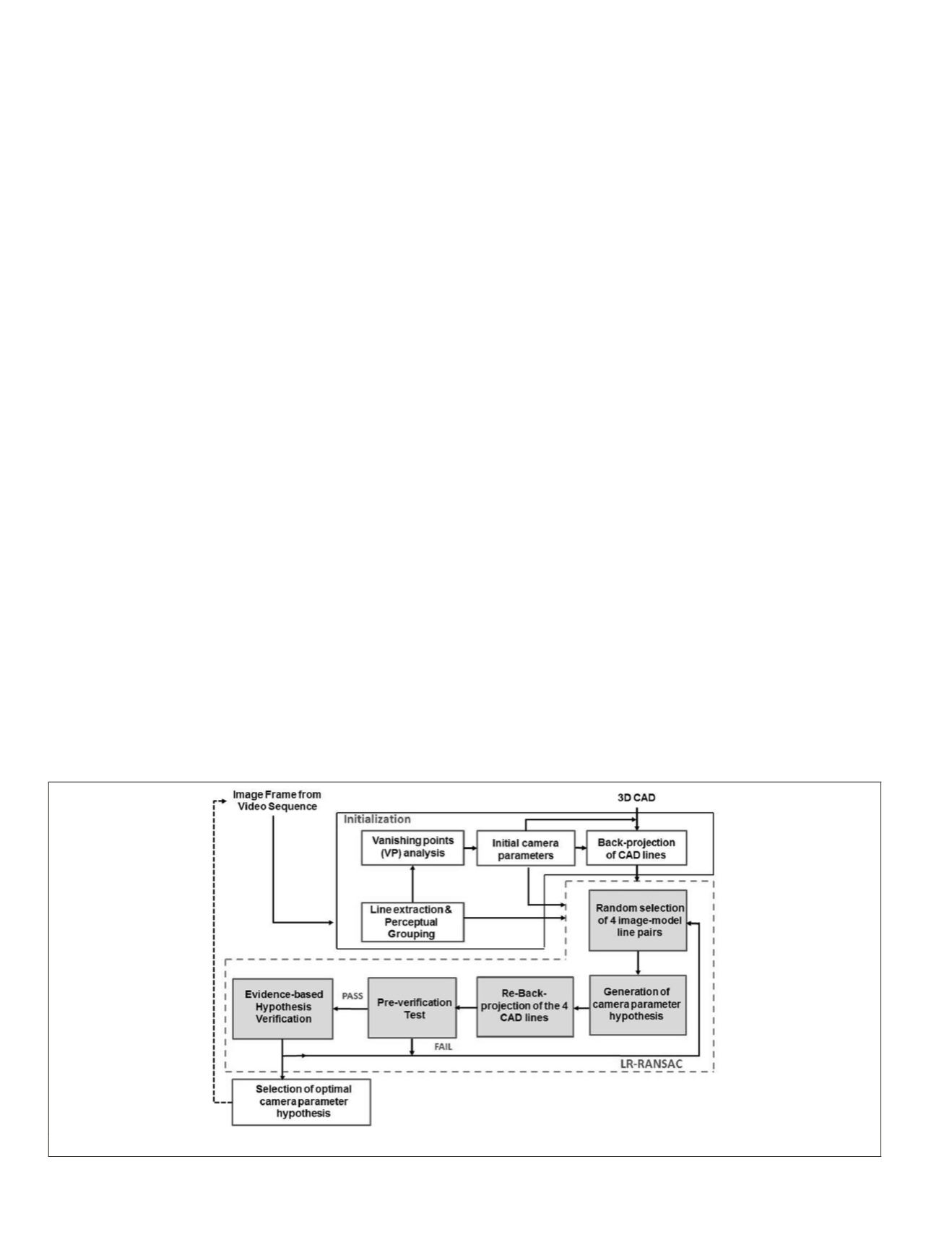

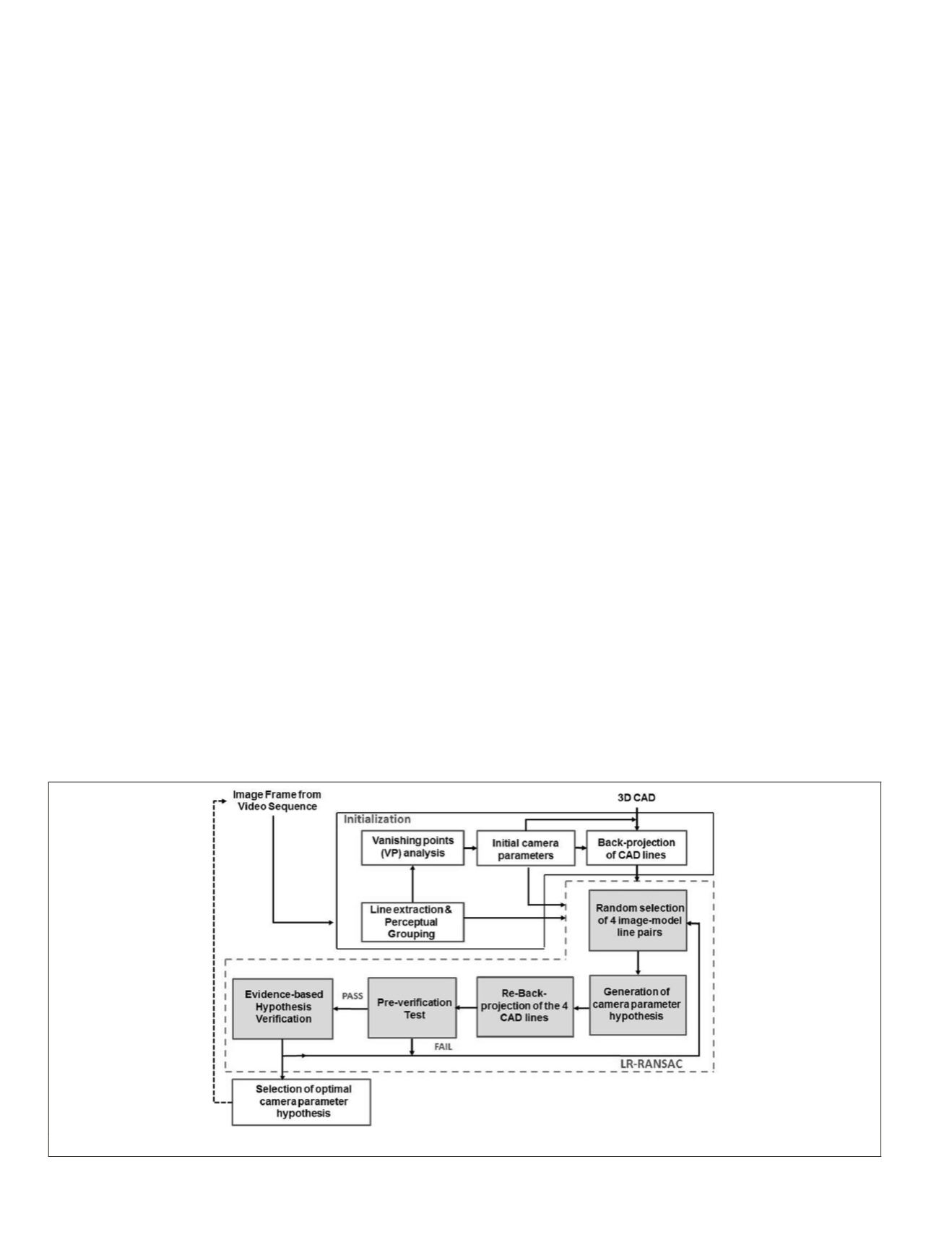

Methodology

The mapping, and thus the registration, of a 3D CAD model with PTZ video images is established by estimating the camera focal length and rotation angles for each image frame of the surveillance video sequence. Figure 2 illustrates the proposed methodology.
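Per-frame estimation needs initial values. One standard textbook way to obtain an initial focal length from a single frame uses the vanishing points of two orthogonal scene directions, such as the two horizontal edge directions of a rectangular building. With the principal point c at the image center (as assumed above) and, additionally assumed here, zero skew and square pixels, the vanishing points satisfy (v1 - c) . (v2 - c) = -f^2. The sketch below is a hedged illustration of that relation, not necessarily the paper's initialization; all function names are hypothetical.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors (homogeneous points or lines)."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def line_through(p, q):
    """Homogeneous line through two image points."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))

def intersect(l1, l2):
    """Image point where two homogeneous lines meet (e.g. a vanishing point)."""
    x, y, w = cross(l1, l2)
    if abs(w) < 1e-12:
        raise ValueError("lines are parallel in the image")
    return x / w, y / w

def focal_from_orthogonal_vps(v1, v2, c):
    """Focal length in pixels from vanishing points of two orthogonal directions.

    Assumes zero skew, square pixels, principal point c, no lens distortion:
    (v1 - c) . (v2 - c) = -f**2.
    """
    dot = (v1[0] - c[0]) * (v2[0] - c[0]) + (v1[1] - c[1]) * (v2[1] - c[1])
    if dot >= 0:
        raise ValueError("vanishing points inconsistent with orthogonal directions")
    return math.sqrt(-dot)
```

With real, noisy line extractions, each vanishing point would normally be estimated from many lines (for example by least squares or a robust estimator) rather than from a single pair.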

The proposed approach consists of two main stages: initialization and the Line-based Randomized RANSAC (LR-RANSAC). During the initialization stage, initial camera parameters are estimated from vanishing point geometry, which enables the back projection of the wireframe model lines LM into image space. The image lines LI are also extracted during this stage. To obtain a suitable registration between the wireframe model and image lines, explicit correspondences must be established between LM and LI. Based on this

Figure 2. General framework of proposed method

848

November 2015

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING